Our mission at Syntegra is to democratize healthcare data and help accelerate real change for patients. From the start, we recognized that the current paradigm of data access in medicine results in an ongoing conflict between the desire to derive insights and the ethical mandate to protect patient privacy. As the use and complexity of data have evolved in the eras of the EHR and big data, privacy techniques have also had to adapt and grow. The growing need for large-scale data has made synthetic data an increasingly important tool for unlocking that data in a secure way.

To mark Data Privacy Day, I’m sharing a look back at the history of data privacy in healthcare, how it led to where we are today, how synthetic data fits into the data privacy landscape, and why synthetic data is an important tool for maintaining privacy and trust.

A Brief History of Data Privacy in Healthcare

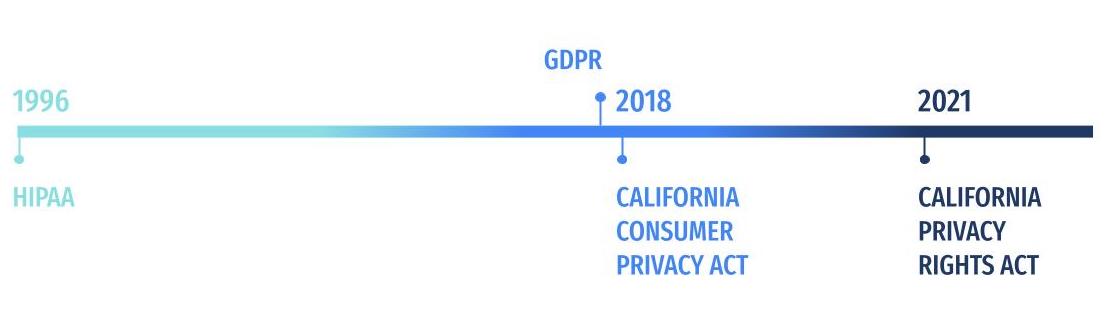

The Health Insurance Portability and Accountability Act of 1996 (HIPAA) was designed to regulate the insurance industry and created national standards to safeguard patients’ sensitive health information. Subsequently, HHS issued the following rules:

- The HIPAA Privacy Rule was issued to implement the requirements of HIPAA and provides standards to address the use and disclosure of individuals’ “protected health information” by providers, health plans, clearinghouses and business associates. This rule also includes standards for an individual’s right to understand and control how their health information is used.

- The HIPAA Security Rule specifically protects “electronic protected health information” (ePHI) under the standards of the Privacy Rule.

In 2018, the General Data Protection Regulation (GDPR) took effect and effectively changed data privacy laws globally, enforcing unprecedented regulation on data protection and privacy in the EU, including the transfer of personal data outside the EU. A key goal was to give individuals more control over their own personal data.

That same year, California passed the California Consumer Privacy Act (CCPA), one of the first comprehensive consumer data privacy laws in the U.S., which put control over personal data back into consumers’ hands.

More recently, the California Privacy Rights Act (CPRA) amended the CCPA, expanding many of its provisions, adding several new rights, and creating the California Privacy Protection Agency. The CPRA is set to take effect in January 2023.

Current Approaches to Protecting Healthcare Data Privacy

Since the enactment of HIPAA in 1996, two methods of de-identifying healthcare data have been used to comply with HIPAA standards: “Safe Harbor” and “Expert Determination.”

The Safe Harbor method involves the removal of 18 types of key identifiers (such as name, email, social security number, etc.) from the data, so that what’s remaining in the data cannot identify any individual.
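To make the idea concrete, here is a minimal Python sketch of Safe Harbor-style scrubbing on a single record. The field names (`name`, `ssn`, `birth_date`, `zip` and so on) are hypothetical, the identifier set covers only a handful of the 18 categories, and the coarsening of dates to a year and ZIP codes to three digits is a simplification that skips the rule's population and age conditions. Treat it as an illustration, not a compliance tool.

```python
from typing import Any

# Hypothetical field names for direct identifiers. A real pipeline would need
# a mapping that covers all 18 Safe Harbor identifier categories.
DIRECT_IDENTIFIERS = {"name", "email", "ssn", "phone", "medical_record_number"}


def safe_harbor_sketch(record: dict[str, Any]) -> dict[str, Any]:
    """Return a copy of `record` with direct identifiers dropped and
    dates and ZIP codes coarsened (illustrative only)."""
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}
    if "birth_date" in out:
        # Assumes an ISO "YYYY-MM-DD" string; keep only the year.
        out["birth_year"] = out.pop("birth_date")[:4]
    if "zip" in out:
        # Keep only the first three digits. The population threshold that
        # the rule attaches to three-digit ZIP codes is not checked here.
        out["zip3"] = out.pop("zip")[:3]
    return out


# Made-up example record.
patient = {
    "name": "Jane Doe",
    "email": "jane@example.com",
    "ssn": "000-00-0000",
    "birth_date": "1970-03-15",
    "zip": "02139",
    "diagnosis": "E11.9",
}
scrubbed = safe_harbor_sketch(patient)
```

Note how much detail disappears even in this toy case: the exact date of birth and the full ZIP code are gone, which is the data quality cost described next.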

Unfortunately, today it is difficult to fully guarantee that a person cannot be identified, and there is a significant loss in data quality when using Safe Harbor.

This is why a more common practice now is “Expert Determination,” a process in which statistical methods are used to assess the data and a third party certifies it as “low disclosure risk.” While Expert Determination does retain greater data usability than Safe Harbor, the process is time-consuming, expensive and not standardized. And, perhaps most importantly, the resulting data still lacks the granularity needed in the areas where it matters most, such as rare disease research or genomics.

Furthermore, a determined adversary with enough computing resources can stage a re-identification attack on any de-identified dataset. This was famously demonstrated in a 1997 attack in which the discharge record of the then-Governor of Massachusetts was re-identified using simple demographic information such as date of birth, ZIP code and gender. The attack reduced confidence in de-identified data, and it led to stricter scrutiny in expert determination and a further reduction in the quality of de-identified datasets.
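The mechanics of this kind of linkage attack are simple enough to sketch in a few lines of Python. The example below joins a "de-identified" table to a public, named table on the three demographic fields mentioned above and reports any record that matches exactly one named person. All records and field names are made up for illustration, and the function `link_records` is a hypothetical helper, not a reconstruction of the 1997 attack.

```python
from collections import defaultdict

# The quasi-identifiers used for the join (hypothetical field names).
QUASI_IDENTIFIERS = ("birth_date", "zip", "gender")


def link_records(deidentified, public):
    """Return (name, diagnosis) pairs for de-identified records whose
    quasi-identifiers match exactly one person in the public list."""
    index = defaultdict(list)
    for person in public:
        key = tuple(person[q] for q in QUASI_IDENTIFIERS)
        index[key].append(person["name"])

    matches = []
    for rec in deidentified:
        key = tuple(rec[q] for q in QUASI_IDENTIFIERS)
        names = index.get(key, [])
        if len(names) == 1:  # a unique match means the record is re-identified
            matches.append((names[0], rec["diagnosis"]))
    return matches


# Made-up data: names removed from the discharge records, but demographics kept.
discharges = [
    {"birth_date": "1950-01-01", "zip": "00001", "gender": "M", "diagnosis": "condition A"},
    {"birth_date": "1980-06-30", "zip": "00002", "gender": "F", "diagnosis": "condition B"},
]
public_list = [
    {"name": "Person One", "birth_date": "1950-01-01", "zip": "00001", "gender": "M"},
    {"name": "Person Two", "birth_date": "1980-06-30", "zip": "00002", "gender": "F"},
    {"name": "Person Three", "birth_date": "1980-06-30", "zip": "00002", "gender": "F"},
]
reidentified = link_records(discharges, public_list)
```

In this toy data, the first discharge record is re-identified because only one named person shares its demographics, while the second stays ambiguous because two people do. The more granular the retained fields, the more records become unique.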

In recent years, we’ve seen a number of additional approaches proposed for privacy preservation:

- Homomorphic encryption provides a way to perform mathematical computation directly on encrypted data, so the data never has to be decrypted and confidentiality guarantees are very strong; however, this technique suffers from significant performance degradation when dealing with anything but very small datasets.

- Federated learning is a framework for training machine learning models in a distributed manner, such that agents collaborate to optimize the learning goal without any party seeing another party’s data. Here the main challenge is the complex and often fragile infrastructure needed at each agent/node.

- Differential privacy provides a mechanism that adds calibrated noise to query results to ensure that information about individuals in the underlying dataset is protected, given a certain “privacy budget,” or set of parameters. Although theoretically sound, differential privacy is limited in practice by reduced data quality (due to the added noise) and the need to adopt DP-aware tooling.
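As one illustration of the last item, the sketch below applies the Laplace mechanism, a standard differential privacy building block, to a simple counting query. The function names and the `epsilon` parameter (standing in for the privacy budget) are assumptions chosen for this example. It uses only the Python standard library and is not meant as production DP tooling.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample Laplace(0, scale) noise as the difference of two independent
    exponential draws, each with mean `scale`."""
    return rng.expovariate(1.0 / scale) - rng.expovariate(1.0 / scale)


def private_count(values, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the items in `values` that satisfy `predicate`.

    Adding or removing one person changes a count by at most 1
    (sensitivity 1), so the Laplace noise scale is 1 / epsilon.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for v in values if predicate(v))
    return true_count + laplace_noise(1.0 / epsilon, rng)


# Made-up ages; count patients aged 65 or over under two privacy budgets.
rng = random.Random(2022)
ages = [34, 71, 68, 45, 90, 52, 66, 29]
loose = private_count(ages, lambda a: a >= 65, epsilon=1.0, rng=rng)
strict = private_count(ages, lambda a: a >= 65, epsilon=0.1, rng=rng)
```

A smaller `epsilon` means stronger privacy but a noisier answer. With only a handful of patients in a cohort, noise at the stricter setting can easily swamp the true count, which is the data quality trade-off described above.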

A New Approach: Synthetic Data

Synthetic data provides a novel alternative to these methods, expanding access to highly accurate data at a more granular level while still completely preserving patient privacy. Synthetic data can be thought of as realistic but not real, or data that looks and acts like real data, but does not include any real patient records, opening up significant opportunities for data-driven advances in the healthcare space.

Privacy You Can Trust

Because synthetic data is generated by a statistical sampling process, it is private by construction. Even so, at Syntegra we felt that healthcare data calls for further proof that the generated synthetic data introduces minimal, if any, disclosure risk.

This is why we’ve developed a set of industry-standard metrics, in partnership with Mirador Analytics, to prove that synthetic data is private. Expressed in easily understood terms of disclosure risk, these metrics can demonstrate the safety of synthetic data and its low disclosure risk even against the most sophisticated adversarial attacks, such as attribute inference or membership inference.

Synthetic Data: Accelerating AI/ML and the Future of Healthcare

Because of its strong statistical fidelity to the real data, Syntegra’s synthetic data can play a major role in the development and adoption of machine learning and artificial intelligence in healthcare. Because it is not constrained by strict governance requirements, data use agreements or secure environments, synthetic data can be quickly deployed in any modern AI/ML environment and used efficiently and effectively to develop predictive models at scale, all without any additional risk to patient privacy.

There is a tremendous amount of secondary health data that cannot be used to its full potential due to privacy concerns. Synthetic data offers a new way of leveraging data at a greater scale for research and analytics across healthcare, while protecting patient privacy in a way that goes beyond even HIPAA or GDPR compliance.